Architecture Data Flow

Resources

You’ll need access to the appropriate groups to work with disruption data, please reach out to the TRT team for access.

DPCR Job Aggregation Configs(private repo)https://github.com/openshift/continuous-release-jobs/tree/main/config/clusters/dpcr/services/dpcr-ci-job-aggregation

Disruption Data Architecture

The below diagram presents a high level overview on how we use our periodic jobs, job aggregation and BigQuery to generate the disruption historical data.

It does not cover how the tests themselves are run against a cluster.

High Level Diagram

How The Data Flows

openshift-tests run disruption samplers, these run GET requests against a number of backends in the cluster every second and record the results to determine disruption. (see Testing Backends For Availability for more info)

To initially setup disruption data collection, this command

./job-run-aggregator create-tables --google-service-account-credential-file <credJsonFile>is run to create theJobs,JobRuns, andTestRunstables in big query. That command is idempotent – i.e., it can be run any time regardless of whether the tables are created or not and is part of thejob-table-updaterCronJob. Each of the “Uploader” CronJobs used in disruption data collection (alert-uploader,disruption-uploader,job-run-uploader, andjob-table-updater) requires theJobstable to exist.The

Jobs,JobRuns, andTestRunstables will already exist so no one should have to run that command unless theJobstable needs to be deleted/re-created. This is rare and only happens when we need to correct something in theJobstable (because big query does not allow updates to tables). TheJobRunsandTestRunstables should generally be preserved because they contain historical disruption data.If someone ever has to delete the

Jobstable, delete it right before thejob-table-updaterCronJob triggers. This way, theJobstable will immediately be re-created for you.The

Disruption Uploaderis aCronJobthat is set to run every4 hours. All theUploaderjobs (disruption-uploader,alert-uploader,job-run-uploader, andjob-table-updater) run in the DPCR cluster in thedpcr-ci-job-aggregationnamespace, the current configuration can be found in theopenshift/continuous-release-jobsprivate repo underconfig/clusters/dpcr/services/dpcr-ci-job-aggregation. .When e2e tests are done the results are uploaded to

GCSand the results can be viewed in the artifacts folder for a particular job run.Clicking the artifact link on the top right of a prow job and navigating to the

openshift-e2e-testfolder will show you the disruption results. (ex..../openshift-e2e-test/artifacts/junit/backend-disruption_[0-9]+-[0-9]+.json).We only pull disruption data for job names specified in the

Jobstable inBigQuery. (see Job Primer for more information on this process)The disruption uploader will parse out the results from the e2e run backend-disruption json files and push them to the openshift-ci-data-analysis table in BigQuery.

We currently run a periodic disruption data analyzer job in the app.ci cluster. It gathers the recent disruption data and commits the results back to

openshift/origin. The PR it generates will also include a report that will help show the differences from previous to current disruptions in a table format. (example PR).Note, the read only BigQuery secret used by this job is saved in

Vaultusing the processes described in this HowTo.The static

query_results.jsoninopenshift/originare then used by the the matchers that thesamplersinvoke to find the best match for a given test (typically with “remains available using new/reused connections” or “should be nearly zero single second disruptions”) to check if we’re seeing noticeably worse disruption during the run.

How To Query The Data Manually

The process for gathering and updating disruption data is fully automated, however, if you wish to explore the BigQuery data set, below are some of the queries you can run. If you also want to run the job-run-aggregator locally, the README.md for the project will provide guidance.

Once you have access to BigQuery in the openshift-ci-data-analysis project, you can run the below query to fetch the latest results.

Query

The below queries are examples, please feel free to visit the linked permalinks for where to find the most up to date queries used by our automation.

| |

| |

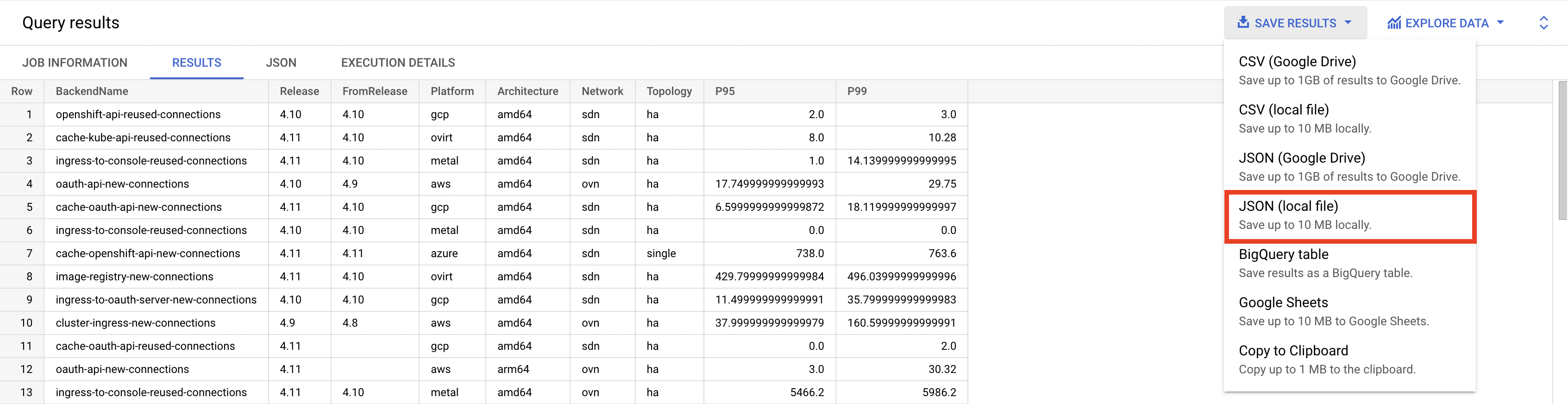

Downloading

Once the query is run, you can download the data locally.